Environment Types in AI

| Fully Observable | Partially Observable |
| --- | --- |
| The agent can always see the entire state of the environment. Example: chess | The agent can see only part of the environment's state at any given time. Example: a card game |
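
The difference between the two columns can be illustrated by what the agent's percept contains. The following is a minimal sketch, assuming a hypothetical `CardGameState` and two illustrative observation functions; none of these names come from a real library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CardGameState:
    """Hypothetical full state of a two-player card game."""
    my_hand: tuple
    opponent_hand: tuple
    table: tuple


def observe_fully(state):
    """Fully observable: the percept is the entire state."""
    return state


def observe_partially(state):
    """Partially observable: the opponent's cards are hidden from the agent."""
    return {
        "my_hand": state.my_hand,
        "table": state.table,
        # Only the number of hidden cards is visible, not their values.
        "opponent_hand_size": len(state.opponent_hand),
    }


state = CardGameState(my_hand=("AS", "KD"), opponent_hand=("7C", "2H"), table=("QS",))
full_percept = observe_fully(state)
partial_percept = observe_partially(state)
print(partial_percept)
```

In the partially observable case the agent has to reason about what the hidden cards might be, which is why such environments usually call for some form of memory or belief tracking.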

| Deterministic | Stochastic |
| --- | --- |
| The current state and the agent's selected action completely determine the next state of the environment. Example: tic-tac-toe | The next state involves randomness and cannot be completely determined by the agent. Example: Ludo (or any dice game) |
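
One way to see the distinction is to compare transition functions. The sketch below assumes a simplified tic-tac-toe board stored as a 9-element tuple and a Ludo-like move that only advances a position by a die roll; both functions are illustrative, not complete game rules.

```python
import random


def tic_tac_toe_step(board, cell, mark):
    """Deterministic: the same board and action always give the same next board."""
    if board[cell] != " ":
        raise ValueError("cell already taken")
    return board[:cell] + (mark,) + board[cell + 1:]


def ludo_step(position, rng):
    """Stochastic: the next position depends on a die roll the agent does not control."""
    return position + rng.randint(1, 6)


empty_board = (" ",) * 9
next_a = tic_tac_toe_step(empty_board, 4, "X")
next_b = tic_tac_toe_step(empty_board, 4, "X")
print(next_a == next_b)  # the two results are identical

rng = random.Random(0)  # seeded only so the sketch is repeatable
outcomes = {ludo_step(0, rng) for _ in range(100)}
print(sorted(outcomes))  # several different next positions from the same start
```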

| Episodic | Sequential |
| --- | --- |
| 1. Only the current percept is required to choose the action. 2. Each episode is independent of the others. Example: a part-picking robot | 1. The agent needs a memory of past actions to determine the next best action. 2. The current decision can affect all future decisions. Example: chess |

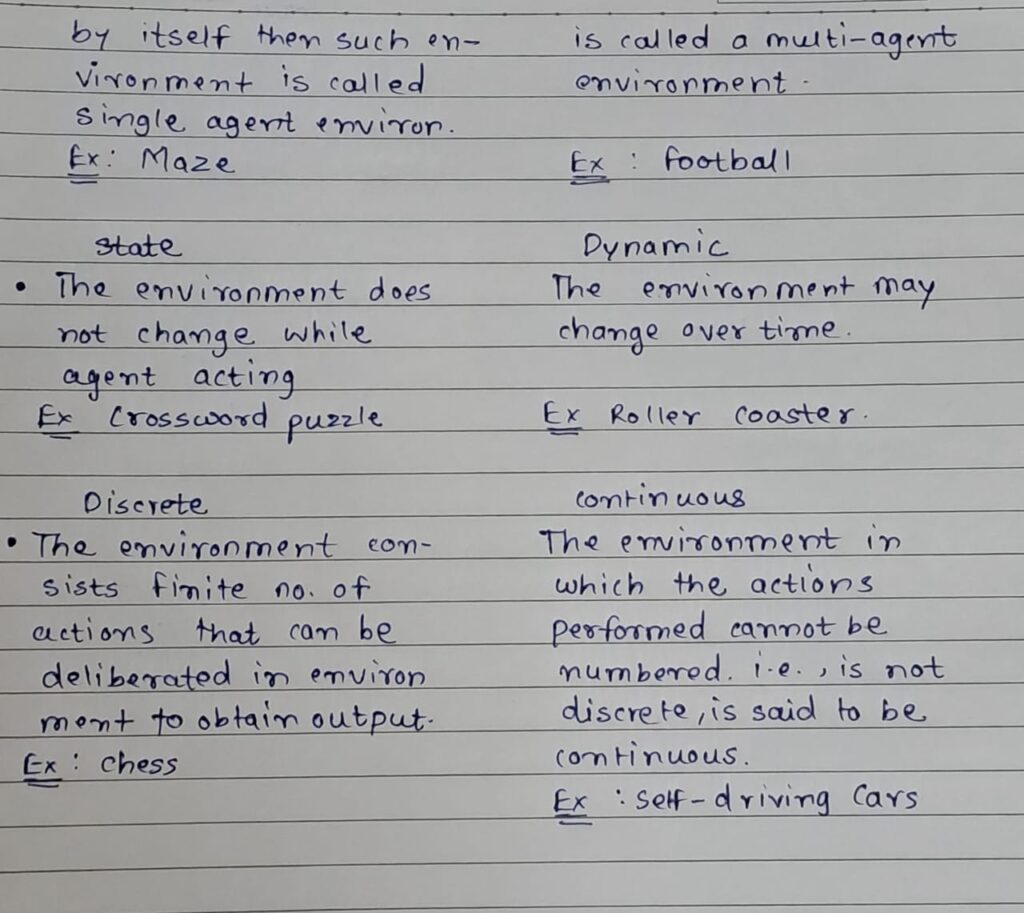

| Single Agent | Multi Agent |
| --- | --- |
| Only one agent is involved in the environment and it operates by itself. Example: a maze | Multiple agents operate in the same environment. Example: football |

| Static | Dynamic |
| --- | --- |
| The environment does not change while the agent is deliberating or acting. Example: a crossword puzzle | The environment may change over time, even while the agent is deciding what to do. Example: a roller coaster |

| Discrete | Continuous |
| --- | --- |
| The environment offers a finite number of actions the agent can choose among. Example: chess | The possible actions cannot be enumerated, i.e., they are not discrete. Example: a self-driving car |
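
The six dimensions in the tables above are independent of each other, so a single environment gets one label per dimension. The following sketch records them in a hypothetical `EnvironmentProfile` class. The tables only state some labels for each example (chess as fully observable, sequential, and discrete; a self-driving car as continuous), so the remaining values are assumptions based on the usual textbook classification and are marked as such in the comments.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentProfile:
    """One boolean per dimension from the comparison tables."""
    fully_observable: bool
    deterministic: bool
    episodic: bool
    single_agent: bool
    static: bool
    discrete: bool

    def describe(self) -> str:
        pairs = [
            (self.fully_observable, "fully observable", "partially observable"),
            (self.deterministic, "deterministic", "stochastic"),
            (self.episodic, "episodic", "sequential"),
            (self.single_agent, "single agent", "multi agent"),
            (self.static, "static", "dynamic"),
            (self.discrete, "discrete", "continuous"),
        ]
        return ", ".join(yes if flag else no for flag, yes, no in pairs)


# Chess: deterministic, multi agent and static (no clock) are assumptions.
chess = EnvironmentProfile(
    fully_observable=True, deterministic=True, episodic=False,
    single_agent=False, static=True, discrete=True,
)

# Self-driving car: everything except "continuous" is an assumption.
self_driving_car = EnvironmentProfile(
    fully_observable=False, deterministic=False, episodic=False,
    single_agent=False, static=False, discrete=False,
)

print(chess.describe())
print(self_driving_car.describe())
```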

In the context of Artificial Intelligence (AI), “environment” refers to the external context or world in which an AI agent operates and interacts. It’s a fundamental concept in AI, particularly in fields like machine learning and reinforcement learning, where the agent’s behavior is shaped by the environment.

Here’s an overview of what the environment entails in AI:

1. Reinforcement Learning (RL) Environment

- In RL, the environment is the external system the agent interacts with to make decisions. The agent receives input from the environment (known as a “state”), takes actions, and receives feedback in the form of rewards or penalties.

- Key Components:

- State: The current condition or configuration of the environment.

- Action: A decision the agent takes to affect the environment.

- Reward: Feedback from the environment about the success or failure of an action.

- Episode: One complete sequence of interactions between the agent and the environment, from an initial state until a terminal state (or a step limit) is reached.
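
The four components above fit together in a simple interaction loop. The sketch below uses a hypothetical toy environment, `CorridorEnv`, in which the agent walks along a short corridor toward a goal cell. The `reset`/`step` method names follow a common convention but are assumptions here, not the API of any particular library.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..length-1, goal is the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0

    def reset(self):
        """Start a new episode and return the initial state."""
        self.position = 0
        return self.position

    def step(self, action):
        """Apply an action (-1 = left, +1 = right); return (state, reward, done)."""
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        reward = 1.0 if done else -0.1  # small penalty for every non-goal step
        return self.position, reward, done


def run_episode(env, policy, max_steps=100):
    """Run one episode and return the total reward collected."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)                  # agent chooses an action from the state
        state, reward, done = env.step(action)  # environment responds
        total_reward += reward
        if done:
            break
    return total_reward


def always_right(state):
    return +1


rng = random.Random(0)  # seeded only so the sketch is repeatable


def random_policy(state):
    return rng.choice([-1, +1])


right_return = run_episode(CorridorEnv(), always_right)
random_return = run_episode(CorridorEnv(), random_policy)
print(right_return, random_return)
```

The policy that always moves right reaches the goal in the fewest steps, so no other policy in this toy environment can collect a higher total reward. A learning agent would have to discover that from the reward signal alone.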

2. Simulated vs. Real-World Environments

- Simulated Environments: These are artificially created environments where AI systems are trained. Examples include game simulations and virtual worlds, such as those provided by toolkits like OpenAI’s Gym. They are used because they provide safe, controlled, and cost-effective settings for training AI models.

- Real-World Environments: In contrast, real-world environments are the actual physical or digital systems AI operates in. Examples include robotics, self-driving cars, and AI in healthcare systems.

3. Environment in Machine Learning

- In supervised and unsupervised learning, the “environment” can be thought of as the data the AI model is trained on. The model learns patterns from this data to make predictions or decisions in a similar environment (i.e., new data it encounters).

- The quality, diversity, and size of the dataset (environment) directly affect the performance of the AI model.

4. Dynamic vs. Static Environments

- Static Environment: The environment does not change as the AI agent acts upon it. This is common in many supervised learning tasks where the data (environment) is pre-defined and doesn’t evolve.

- Dynamic Environment: The environment changes over time, potentially as a result of the agent’s actions. This is common in real-time AI systems like self-driving cars, where the environment is constantly changing and the agent must adapt continuously.
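
The practical consequence of this distinction is what happens while the agent is still deciding. The sketch below assumes two hypothetical environments with a `tick()` method that represents time passing during deliberation. The class names and the numbers are illustrative only.

```python
class StaticPuzzle:
    """Static: nothing changes while the agent thinks."""

    def __init__(self):
        self.grid = ["_"] * 5

    def tick(self):
        pass  # time passes, the puzzle stays the same

    def read_state(self):
        return tuple(self.grid)


class DynamicTraffic:
    """Dynamic: the world keeps moving while the agent thinks."""

    def __init__(self):
        self.gap_to_lead_car = 50.0  # metres, illustrative value

    def tick(self):
        self.gap_to_lead_car -= 5.0  # the lead car keeps slowing down

    def read_state(self):
        return self.gap_to_lead_car


def deliberate(env, thinking_ticks):
    """Return the state before and after the agent spends time deciding."""
    before = env.read_state()
    for _ in range(thinking_ticks):
        env.tick()
    return before, env.read_state()


puzzle_before, puzzle_after = deliberate(StaticPuzzle(), thinking_ticks=3)
traffic_before, traffic_after = deliberate(DynamicTraffic(), thinking_ticks=3)
print(puzzle_before == puzzle_after)   # the percept is still valid
print(traffic_before, traffic_after)   # the percept is already stale
```

In the dynamic case a decision based on the earlier percept may no longer be appropriate, which is why agents in such environments typically need to re-sense and act within a time budget.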

5. Environment in Robotics and Autonomous Systems

- For robots and autonomous agents, the environment refers to the physical world they navigate and interact with. The challenges here involve dealing with uncertainty, noise, and the complexity of the real-world environment (e.g., obstacles, changing conditions, unpredictable human actions).

6. Open-World vs. Closed-World Environments

- Open-World Environment: The AI system can encounter situations it hasn’t been trained on, requiring it to generalize from limited knowledge.

- Closed-World Environment: The AI system operates within a well-defined, limited set of conditions or scenarios.

7. Multi-Agent Environments

- Some environments involve multiple AI agents interacting with each other or with human agents. In such environments, the agent’s actions can affect others, and the agent must consider not only the environment’s response but also the behavior of other agents.
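
A small sketch can make the dependence on other agents concrete. It assumes a hypothetical two-agent passing drill loosely inspired by the football example: a pass succeeds only when the passer and the receiver pick the same side. The function and agent names are illustrative.

```python
def joint_step(actions):
    """Take one action per agent and return one reward per agent.

    The reward depends on the joint action, not on any single agent's choice.
    """
    success = actions["passer"] == actions["receiver"]
    reward = 1 if success else 0
    return {name: reward for name in actions}


# The passer makes the same choice in both cases...
coordinated = joint_step({"passer": "left", "receiver": "left"})
uncoordinated = joint_step({"passer": "left", "receiver": "right"})

# ...but the outcome differs because of what the other agent did.
print(coordinated["passer"], uncoordinated["passer"])
```

From the passer's point of view the same action produces different rewards, so a good policy has to model or anticipate the receiver's behavior as well as the environment itself.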

8. Ethical and Social Environments

- As AI is deployed in real-world applications (e.g., healthcare, finance, criminal justice), the environment also includes social, ethical, and regulatory contexts. AI must operate responsibly within these environments, taking into account societal norms, privacy concerns, fairness, and biases.

Summary

The “environment” in AI encompasses the external system that an AI agent interacts with, learns from, and makes decisions within. It plays a crucial role in determining the success of AI models, influencing their training and real-world applicability.